Back in 2018, now five years ago, we published a blog post describing our 400+ node Elasticsearch cluster. In that post we brought up an important topic:

So far, we have elected to not upgrade the cluster. We would like to, but so far there have been more urgent tasks. How we actually perform the upgrade is undecided, but it might be that we choose to create another cluster rather than upgrading the current one.

Well, the day to upgrade finally came.

A few weeks ago, we completed the last steps in terminating our old, 1,100-node Elasticsearch cluster and its surrounding infrastructure. By that time, all dependent applications had already been routed to the new Elasticsearch cluster that replaces it. This meant that the shutdown itself was a non-event for our users, but for us in the team it was an important milestone and a worthy ending to a successful multi-year project.

We decided to write this blog post series to share what we learned, describe some of the challenges we faced, and explain how we overcame them.

This blog post gives an overview of the project, the challenges we had with the old cluster and the constraints we operated within. The other posts in this series will dive deep into other interesting topics. We plan to release at least one new post every week up until Christmas.

So stay tuned for more.

Background and use cases

As described in prior blog posts, we at Meltwater are users of the Elasticsearch open source search engine. We use it to store around 400 billion social media posts and editorial articles from all the news sources you can imagine. The platform then provides our customers with search results, graphs, analytics, dataset exports and high-level insights.

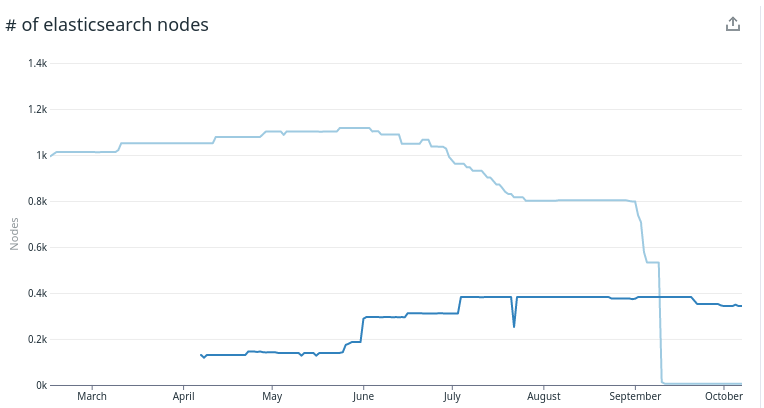

Up until last month, this massive dataset was hosted on a custom-forked, in-house maintained version of Elasticsearch. The cluster contained close to 1,100 i3en.3xlarge nodes running in AWS. The cluster had many thousands of indices, almost 100,000 shards and close to 1 PB of primary data, reaching over 3 PB with replicas.

Today, after the upgrade, the dataset is actually a bit larger at 3.5 PB (for reasons that we will explain in a later blog post), and the total number of nodes is now “only” 320 (mainly i3en.6xlarge instances). Perhaps most importantly, we now run an officially supported version of Elasticsearch.

Reasons for the upgrade

For older versions of Elasticsearch, Elastic and Amazon advise against running clusters larger than 100 nodes. But as you have probably gathered by now, we passed that limit many years ago. We kept patching and improving our custom version to keep serving our business growth and ever-expanding use cases.

However, as our cluster grew, we eventually began to feel the limitations of running such an old version. For example, the version we used did not handle incremental updates to the cluster state very well. Elasticsearch sent the entire state, which in our case was more than 100 MB, to every single one of our 1,100 nodes for every single state change. This made the 100 Gigabit network bandwidth on our master nodes a significant bottleneck.
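To get a feel for the scale of the problem, here is a rough back-of-the-envelope calculation. It is only a sketch: it assumes exactly 100 MB of uncompressed state, 1,100 recipients, a single master using its full 100 Gbit/s link, and no protocol overhead. These are illustrative round numbers taken from the paragraph above, not measurements.

```python
# Back-of-the-envelope cost of one full cluster-state broadcast.
# All inputs are illustrative assumptions, not measured values.
STATE_SIZE_MB = 100      # lower bound for the cluster state size
NODE_COUNT = 1100        # nodes that receive the full state
LINK_GBIT_PER_S = 100    # network bandwidth of the master node


def broadcast_cost(state_mb, nodes, link_gbit_per_s):
    """Return (total gigabytes sent, seconds needed at full line rate)."""
    total_gb = state_mb * nodes / 1000       # MB -> GB, decimal units
    link_gb_per_s = link_gbit_per_s / 8      # Gbit/s -> GB/s
    return total_gb, total_gb / link_gb_per_s


total_gb, seconds = broadcast_cost(STATE_SIZE_MB, NODE_COUNT, LINK_GBIT_PER_S)
print(f"{total_gb:.0f} GB per state change, at least {seconds:.1f} s on the wire")
```

Under these assumptions, a single state change moves about 110 GB and keeps the link busy for at least 8.8 seconds.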

Some other trouble areas with the old Elasticsearch version were:

Unpredictable behavior when nodes and shards were leaving/joining the cluster

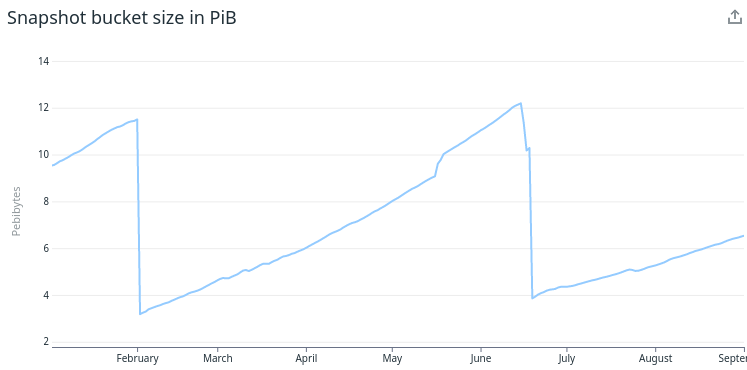

The S3 backup handling

- Due to inefficiencies in how the old version of Elasticsearch handled S3 type repositories, we were unable to delete old backups fast enough to keep up with the inflow of new data. So our backups kept on growing and growing in size.

- We had to periodically switch the S3 bucket and start over with a new snapshot from scratch a few times per year to keep the storage costs at an acceptable level.

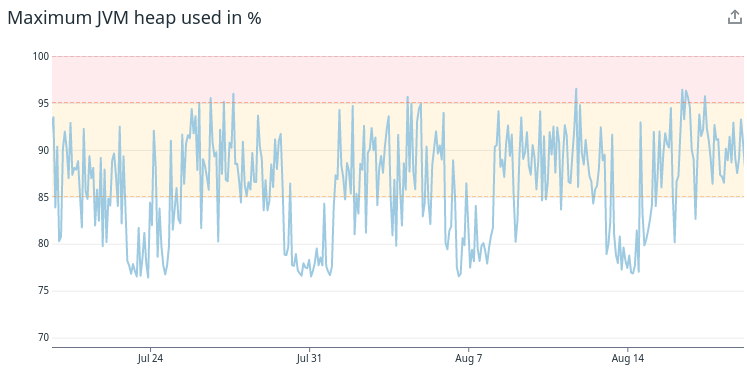

Spiky heap usage combined with insufficient (or lacking) circuit breakers and safety nets

- On numerous occasions, a poorly written user query was allowed to execute and allocate too much memory, causing nodes to enter GC hell and crash.

- GC hell means that a node spends all its time and CPU on garbage collection and has no time left for other useful work, such as responding to cluster pings or executing searches. A node in this state slows down the entire cluster, as the master and all other nodes get timeouts on their requests to it. Java garbage collection is a fascinating subject, but out of scope for this blog post.

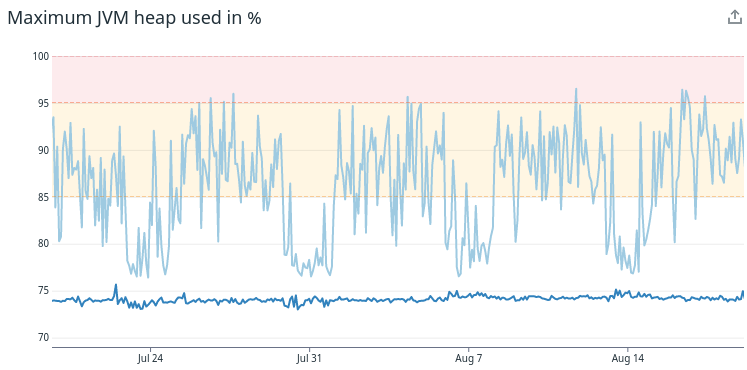

- Not to mention that even when nodes were operating in “normal” mode, the heap usage looked very spiky and unpredictable.
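For readers unfamiliar with the term, a circuit breaker in this context is a guard that estimates how much memory a request will need and rejects it up front if the node cannot afford it. The following is a minimal, generic sketch of the idea. The class and method names are hypothetical, and this is not how Elasticsearch implements it.

```python
class CircuitBreakingError(Exception):
    """Raised when a request would push estimated memory use over the limit."""


class MemoryCircuitBreaker:
    """Hypothetical guard that tracks estimated memory reservations."""

    def __init__(self, limit_bytes):
        self.limit_bytes = limit_bytes
        self.used_bytes = 0

    def reserve(self, estimated_bytes):
        # Reject the request before it allocates anything, instead of
        # letting the garbage collector deal with the consequences.
        wanted = self.used_bytes + estimated_bytes
        if wanted > self.limit_bytes:
            raise CircuitBreakingError(
                f"would use {wanted} bytes, limit is {self.limit_bytes}"
            )
        self.used_bytes = wanted

    def release(self, estimated_bytes):
        # Give the reservation back once the request has finished.
        self.used_bytes = max(0, self.used_bytes - estimated_bytes)


breaker = MemoryCircuitBreaker(limit_bytes=1_000)
breaker.reserve(600)        # a reasonable query fits within the limit
try:
    breaker.reserve(500)    # a greedy query is rejected up front
    rejected = False
except CircuitBreakingError:
    rejected = True
breaker.release(600)
```

The point of the pattern is that one bad query fails fast with an error, while the node itself keeps serving everyone else.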

Too much of our segment data was stored on the heap

- We used more than 10 TiB of RAM, or about 40% of the total available Java heap in the whole cluster, for segment data alone. The Java heap (not disk or CPU) was our primary scaling metric for the whole cluster.

Unable to use newer versions of Java and more modern garbage collection implementations.

- This forced us to use no more than 30 GB of Java heap per node, even if the OS could have several hundred GB of RAM available.

- In turn, this forced us to scale out (= more machines) instead of scaling up (= more powerful machines), which is not always the best choice.
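Because heap was our primary scaling metric, the effect of a per-node heap cap on the node count can be sketched with simple arithmetic. The numbers below are assumptions for illustration only: roughly 1,100 nodes at 30 GiB each as the total heap requirement, and a hypothetical 60 GiB per node as the scale-up alternative.

```python
import math


def nodes_needed(total_heap_gib, heap_per_node_gib):
    """Smallest node count whose combined heap covers the requirement."""
    return math.ceil(total_heap_gib / heap_per_node_gib)


# Assumption: about 1,100 nodes at 30 GiB each, so 33,000 GiB in total.
TOTAL_HEAP_GIB = 1100 * 30

capped = nodes_needed(TOTAL_HEAP_GIB, 30)     # heap capped at 30 GiB per node
doubled = nodes_needed(TOTAL_HEAP_GIB, 60)    # hypothetical 60 GiB per node
print(capped, doubled)
```

With the cap in place, the only way to add heap is to add machines. Doubling the usable heap per node would halve the node count for the same total heap in this simplified model.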

We believed that all of the above issues, and more, would improve if we upgraded to a later version of Elasticsearch.

Last but not least, we also knew that Meltwater would continue to grow. We knew that we would have a continuous need to support even more data, more users and more use cases in the future, and we felt that we were approaching a hard limit on the size of the cluster on the current Elasticsearch version.

Therefore, the organization finally decided that it was time to invest in a multi-year project to upgrade our Elasticsearch cluster.

Where did we land?

As mentioned, we will share a lot more about how we did the migration, but we want to start with a sneak peek at the benefits the upgrade gave us in the end. These are best explained with a number of figures.

In all figures below light blue = the old cluster, and dark blue = the new cluster.

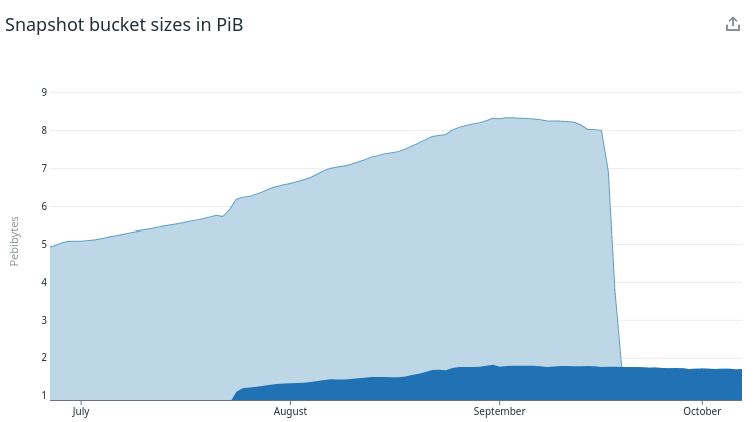

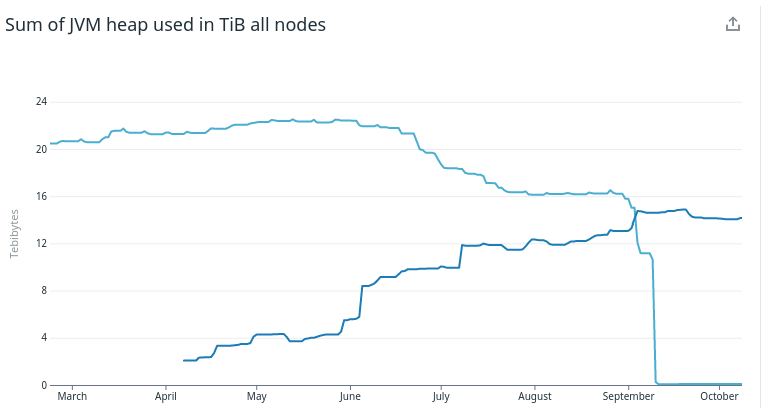

The left figure shows that we were able to run with ~320 nodes instead of >1100. It also shows how long we were running with two parallel clusters and the gradual scale up/scale down we could do as more and more data and searches were moved from the old into the new system.

The right figure shows that the uncontrolled growth of the snapshot size was indeed fixed in the new Elasticsearch version, and we can now keep the backup costs down.

These two figures show the improvements that were made in heap usage patterns. The left figure shows that the heap usage is basically flat for the new cluster. The right figure shows that the sum of the heap usage is also lower now (14 TiB vs 22 TiB), even though that was not a goal in itself.

We can also see in the above figures that we have been able to further optimize and scale down the new cluster after the migration was completed. That would not have been possible in the old version where we always had to scale up due to the constant growth of our dataset.

But let’s not get further ahead of ourselves. This blog post series is just as much about how and why we did things as it is about what we gained in the end.

So, back to the story about the migration.

Rollout requirements

Meltwater is a global company, which means that our systems need to operate and be available 24 hours a day, 7 days a week.

While we can schedule occasional downtimes for maintaining parts of our system, taking down the engine that powers all of Meltwater’s search and analytics functionality was simply not an option. Therefore, any rollout strategy without a safe rollback strategy was not acceptable.

Given this constraint, our only option was to plan an upgrade path that would make the process invisible to our customers, to our support organization and all the other 30+ development teams we have in the company.

Further, we also had a requirement to do an incremental rollout where we could switch individual queries/users/applications back and forth between the new and the old system.

So in short, we had to design a gradual, reversible, no-downtime rollout for the whole system.
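One way to picture such a reversible switch is a small routing table that is consulted for every request. The sketch below is purely hypothetical: `RolloutRouter`, the cluster labels and the application names are invented for illustration and do not describe our actual implementation.

```python
from dataclasses import dataclass, field

OLD, NEW = "old", "new"


@dataclass
class RolloutRouter:
    """Hypothetical per-application switch between two clusters."""

    default: str = OLD
    overrides: dict = field(default_factory=dict)  # app name -> cluster

    def switch(self, app, cluster):
        # Move a single application to the given cluster.
        if cluster not in (OLD, NEW):
            raise ValueError(f"unknown cluster: {cluster}")
        self.overrides[app] = cluster

    def rollback(self, app):
        # Rolling back is just removing the override again.
        self.overrides.pop(app, None)

    def cluster_for(self, app):
        return self.overrides.get(app, self.default)


router = RolloutRouter()
router.switch("dashboard", NEW)
first = router.cluster_for("dashboard")     # routed to the new cluster
router.rollback("dashboard")
second = router.cluster_for("dashboard")    # back on the old cluster
other = router.cluster_for("exports")       # untouched apps stay on the default
```

Keeping the old cluster as the default means that every move is opt-in and can be undone for one application without affecting any other.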

Stay tuned for the next part in this series to learn how we set out to meet those requirements by using two Elasticsearch clusters running in parallel and serving the same data.

Up next: Part 2 - Two consistent clusters