AWS is a wonderful ecosystem in terms of infrastructure, but the UX and the sheer number of domain-specific terms can be overwhelming when you are trying to understand how to do things. Migrating a database from one account to another turned out to be more complicated than we first assumed. In this post I document every small step that I found to be missing from other guides.

The Challenge

Our team was handing over a piece of software to another team in the Meltwater Engineering organization. The way we are structured, each team maintains its own AWS account. Our software depended on a DynamoDB table that now needed to be migrated to the new account. We spent some time researching various methods and settled on AWS Glue, a tool AWS provides for scripting the movement of data.

Most of the guides and blog posts we found were either very high level, too vague, or tailored to a different use case. The guide we decided to follow, while good for our use case, still left out a lot of details. Those details may be obvious to an experienced AWS sysadmin, but for us as developers it took a lot of trial and error to follow along. The method described in this blog post is largely what is suggested in “How can I migrate my DynamoDB tables from one AWS account to another?”, but written up in a way that would have helped us when we attempted it.

DynamoDB Migration Step-by-Step

This guide assumes a simple backup to S3, with no real-time synchronization between the two databases. We do not handle deltas (writes that reach the source table after the export is taken), since our use case did not require it.

1. Set up an S3 bucket in the target account. No public access is needed, nor any additional or special settings. Once the bucket is created, go to the Permissions tab in the bucket console and add the account number and exporting role of the source account to the ACL. In our AWS setup this role is the Developer role, meaning our principal had the form “role/Developer” rather than “user/Dave”. See “Requesting a table export in DynamoDB”.

2. Set up your current role in the source account to have write access to S3 by adding an S3 policy, as per the link above. As in step 1, the role that needed this access is the “Developer” role.
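The permissions from steps 1 and 2 can be sketched as a policy document. This is a minimal sketch, assuming access is granted through a bucket policy (the “role/Developer” principal form suggests one). The function name, account ID and bucket name are hypothetical placeholders, and the action list is an assumption that you should check against the export documentation before use.

```python
import json


def export_bucket_policy(source_account_id, role_name, bucket_name):
    """Build a bucket policy that lets a role in the source account write export files."""
    principal = f"arn:aws:iam::{source_account_id}:role/{role_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowDynamoDBExportFromSourceAccount",
                "Effect": "Allow",
                "Principal": {"AWS": principal},
                # Assumed action list: upload objects and set their ACLs.
                "Action": [
                    "s3:AbortMultipartUpload",
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                ],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values; replace with your own account ID, role and bucket.
    policy = export_bucket_policy("111122223333", "Developer", "my-export-bucket")
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the bucket policy editor on the Permissions tab of the target bucket.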

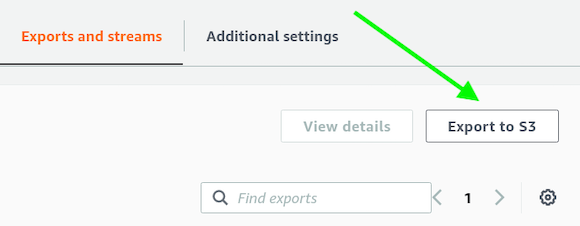

3. Export your table using the “Export to S3” button. Choose the bucket created in the target account.
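If you prefer a script over the console button, the export can also be requested through the API. The sketch below assumes boto3 is installed and that point-in-time recovery is enabled on the source table, which exports to S3 require. The helper names are hypothetical, and the table ARN, bucket name and account ID are placeholders you supply.

```python
def build_export_request(table_arn, bucket, bucket_owner_account_id):
    """Arguments for DynamoDB's ExportTableToPointInTime call."""
    return {
        "TableArn": table_arn,
        "S3Bucket": bucket,
        # The bucket lives in the target account, so its owner must be named.
        "S3BucketOwner": bucket_owner_account_id,
        "ExportFormat": "DYNAMODB_JSON",
    }


def request_export(table_arn, bucket, bucket_owner_account_id, client=None):
    """Start the export with source-account credentials and return its ARN."""
    if client is None:
        import boto3  # imported lazily so the request builder works without boto3

        client = boto3.client("dynamodb")
    response = client.export_table_to_point_in_time(
        **build_export_request(table_arn, bucket, bucket_owner_account_id)
    )
    return response["ExportDescription"]["ExportArn"]
```

Run `request_export(...)` with credentials for the exporting role in the source account, the same role that was given write access in step 2.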

4. Fetch coffee while the export runs. From this step on, everything is done in the target account.

5. Once the export is finished, change ownership of the items in the S3 bucket to the target account.

a. Get the list of files from the bucket (use awsudo):

    $ aws s3 ls s3://<target-bucket> --recursive | awk '{print $4}' > files.txt

b. Apply a bash script to the list of files (use awsudo):

    #!/bin/bash
    # Grant the bucket owner full control over every exported object.
    input="files.txt"
    while IFS= read -r line
    do
      aws s3api put-object-acl --bucket <YOUR-BUCKET> --key "$line" --acl bucket-owner-full-control
      echo "$line"
    done < "$input"

6. Create the DynamoDB table you wish to import to. Set the capacity mode to “On-Demand” if you want your import to run as fast as possible. If you want it slower, you can fix the provisioning at a lower level, but then you might also consider lowering the throughput of the import job (see later) to minimize throttling events.
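Creating the target table can also be scripted. The following is a minimal sketch, assuming boto3 and a table with a single string partition key; the helper names are hypothetical, and the key name `id` in the usage note is borrowed from the example mapping later in this post, so replace the key schema with that of your source table.

```python
def build_table_definition(table_name, hash_key, hash_key_type="S"):
    """Arguments for create_table with on-demand (pay-per-request) capacity."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [
            {"AttributeName": hash_key, "AttributeType": hash_key_type}
        ],
        "KeySchema": [{"AttributeName": hash_key, "KeyType": "HASH"}],
        # On-demand capacity, so the import is not capped by provisioned writes.
        "BillingMode": "PAY_PER_REQUEST",
    }


def create_target_table(table_name, hash_key, client=None):
    """Create the table in the target account and wait until it exists."""
    if client is None:
        import boto3  # imported lazily so the definition builder works without boto3

        client = boto3.client("dynamodb")
    client.create_table(**build_table_definition(table_name, hash_key))
    client.get_waiter("table_exists").wait(TableName=table_name)
```

For example, `create_target_table("<MyDynamoDBTable>", "id")` would create an on-demand table keyed on `id`.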

7. Time to set up AWS Glue! First, create a Glue service role in IAM, as documented in the AWS Glue documentation. I named mine “glue-role”. In addition to the policies recommended in the guide, add AmazonDynamoDBFullAccess so the role can write to DynamoDB.

8. Open the AWS Glue console. Create a database source that crawls your S3 bucket, like this:

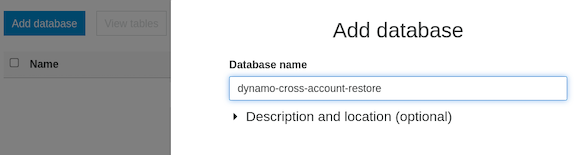

a. Go to AWS Glue -> Databases. Click “Add database”.

b. Go to AWS Glue -> Crawlers. Click “Add crawler”. Choose any crawler name you like, and press “Next”.

c. In “Specify crawler source type”, ensure that the crawler source type is “Data stores” and choose whether you want the crawler to run on all folders or just on new folders (for this use case it probably does not matter).

d. In “Add a data store”, select “S3” from the drop down. You don’t need to add a connection. Add the path to your S3 bucket, should look something like this:

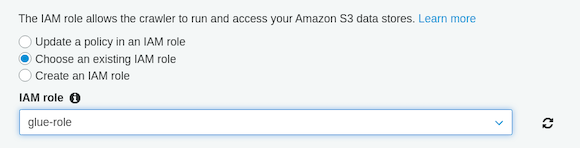

s3://<bucketname>/AWSDynamoDB/<hash-id>/datae. Click next until you get to the “Add IAM role”. Select “Choose an existing role” and select the glue-role created in step 7 from the drop down.

f. Choose your schedule - run on demand is fine.

g. Select the database created in 8a as the output target. You can leave the other settings as is.

h. Review and finish.

i. Run the crawler by selecting it and pressing “run crawler” on the crawler overview page. You should now see tables with data in your Glue source database.
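The console clicks in steps a to i can be approximated with a few API calls. This is only a sketch, assuming boto3 and the role from step 7; the function names are hypothetical, and the database name, crawler name and S3 path are placeholders. Leaving out a schedule corresponds to “run on demand”.

```python
def build_crawler_request(crawler_name, role_name, database_name, s3_path):
    """Arguments for Glue's create_crawler call with a single S3 data store."""
    return {
        "Name": crawler_name,
        "Role": role_name,
        "DatabaseName": database_name,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }


def crawl_export(crawler_name, role_name, database_name, s3_path, glue=None):
    """Create the Glue database and crawler, then start the crawler once."""
    if glue is None:
        import boto3  # imported lazily so the request builder works without boto3

        glue = boto3.client("glue")
    # Step a: the database that will receive the crawled tables.
    glue.create_database(DatabaseInput={"Name": database_name})
    # Steps b to h: a crawler over the export folder, with no schedule.
    glue.create_crawler(
        **build_crawler_request(crawler_name, role_name, database_name, s3_path)
    )
    # Step i: run it on demand.
    glue.start_crawler(Name=crawler_name)
```

A call could look like `crawl_export("export-crawler", "glue-role", "<GlueDatabaseName>", "s3://<bucketname>/AWSDynamoDB/<hash-id>/data")`. The crawler runs asynchronously, so the tables appear in the Glue database only after it has finished.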

9. Now to the fun part! We will create a Glue job using a custom Python script to import the data from the Glue source to your new DynamoDB table.

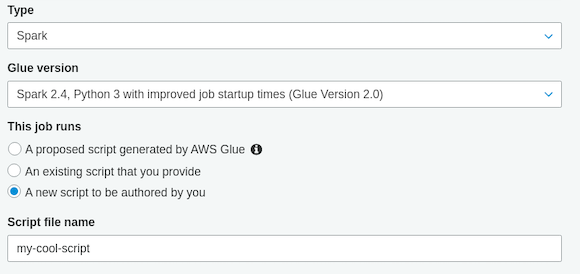

a. Go to AWS Glue -> Jobs and press “add job”. Give the job a name, and choose your previously created glue-role from the IAM drop down. Select “A new script to be authored by you” as input for the job.

b. Click next. Do not add any connections (the script will provide those), just click “Save job and edit script”.

c. You will now find yourself in a code editor environment. Paste the following script and edit it accordingly:

    import sys
    from awsglue.transforms import *
    from awsglue.utils import getResolvedOptions
    from pyspark.context import SparkContext
    from awsglue.context import GlueContext
    from awsglue.job import Job

    args = getResolvedOptions(sys.argv, ['JOB_NAME'])

    sc = SparkContext()
    glueContext = GlueContext(sc)
    spark = glueContext.spark_session
    job = Job(glueContext)
    job.init(args['JOB_NAME'], args)

    # Initialize the dynamic frame using the Glue Data Catalog database and table.
    # Replace <GlueDatabaseName> and <GlueTableName> with the Glue database and
    # table names respectively, as created in step 8.
    Source = glueContext.create_dynamic_frame.from_catalog(
        database = "<GlueDatabaseName>",
        table_name = "<GlueTableName>",
        transformation_ctx = "Source")

    # Map the source field names and data types to target values.
    # The values below are hypothetical - replace this with the schema of your
    # source database.
    Mapped = ApplyMapping.apply(
        frame = Source,
        mappings = [
            ("Item.date.S", "string", "date", "string"),
            ("Item.count.N", "string", "count", "double"),
            ("Item.name.S", "string", "name", "string"),
            ("Item.id.S", "string", "id", "string")],
        transformation_ctx = "Mapped")

    # Write to the target DynamoDB table. Replace <MyRegion> and <MyDynamoDBTable>
    # with the region and table name respectively.
    glueContext.write_dynamic_frame_from_options(
        frame=Mapped,
        connection_type="dynamodb",
        connection_options={
            "dynamodb.region": "<MyRegion>",
            "dynamodb.output.tableName": "<MyDynamoDBTable>",
            "dynamodb.throughput.write.percent": "1.0"
        }
    )

    job.commit()

10. Save the job and run it. If your logs show access errors, your glue role might need additional policies (most likely for DynamoDB writes). If you get connection throttling or timeouts, try lowering the dynamodb.throughput.write.percent setting in the DynamoDB connection options in the script above.
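The mapping list in the job script follows a regular pattern: each entry is (source path, source type, target name, target type), and the source path is `Item.<attribute>.<DynamoDB type letter>`. For tables with many attributes it can be generated instead of typed by hand. The helper below is a hypothetical sketch that only covers flat string (S) and number (N) attributes, and it maps numbers to “double” simply because the example in this post does.

```python
# Target Glue types per DynamoDB type descriptor (an assumption; extend as needed).
TARGET_TYPES = {"S": "string", "N": "double"}


def build_mappings(attributes):
    """Turn {attribute name: DynamoDB type letter} into ApplyMapping tuples."""
    mappings = []
    for name, ddb_type in attributes.items():
        if ddb_type not in TARGET_TYPES:
            raise ValueError(f"unsupported DynamoDB type {ddb_type!r} for {name!r}")
        # In the export, both S and N values are serialized as JSON strings,
        # so the source type is "string" in either case.
        mappings.append(
            (f"Item.{name}.{ddb_type}", "string", name, TARGET_TYPES[ddb_type])
        )
    return mappings


if __name__ == "__main__":
    for mapping in build_mappings({"date": "S", "count": "N", "name": "S", "id": "S"}):
        print(mapping)
```

The resulting list can be pasted into the `mappings` argument of `ApplyMapping.apply`. Nested or set-typed attributes need hand-written entries.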

To monitor how your job runs, open an additional tab to your DynamoDB table dashboard and look at the “Metrics” tab, where you can see how many writes you have and if there is throttling.

To increase write speed, you can exit the job IDE and instead choose “Edit job” on the Jobs tab, where you can add concurrency and/or more workers. The default is 1/10. We have been successful running with concurrency 2 without throttling, but this depends on your type of data and database schema.

11. Have more coffee. The job will stop when it is done. Note that DynamoDB only updates the table's total item count automatically about every 6 hours, so if you are unsure whether all of your items were correctly inserted, you will want to manually scan the DynamoDB table for the current count, using the button on the DynamoDB console.
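The manual count can also be done from a script, which makes it easy to compare the source and target tables. This is a minimal sketch, assuming boto3; the function name is hypothetical. A scan reads the whole table page by page and consumes read capacity, so expect it to take a while on large tables.

```python
def count_items(table_name, client=None):
    """Count the items in a table by paging through a COUNT-only scan."""
    if client is None:
        import boto3  # imported lazily so the function can be tested with a stub

        client = boto3.client("dynamodb")
    total = 0
    kwargs = {"TableName": table_name, "Select": "COUNT"}
    while True:
        page = client.scan(**kwargs)
        total += page["Count"]
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return total
        # More pages remain: continue where the previous page stopped.
        kwargs["ExclusiveStartKey"] = last_key
```

Running `count_items` against the source table in the source account and against the new table in the target account should give the same number, provided nothing was written to the source after the export.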

That is it. You are done. This is a bit hairy and you did well! Congratulations :)

I remain convinced that I have overlooked an even simpler solution to how this can be done - it surprised us slightly that there was no simple export/import option. If you are aware of a better way to migrate a database from one AWS account to another, we would love to hear about it in the comments below.