Meltwater recently released a new product feature called Signals, which helps our customers to identify business-critical events.

In a previous post we introduced the concept of record linking, and presented our first approach for merging information from multiple sources for our Knowledge Graph.

In this second post, we share how we improved those initial models based on user feedback and analysis of learning features, and also present formal evaluation metrics.

Improved Organizations model

For context please refer to our previous blog post where we introduced our Record Linking Pipeline based on blocking, a machine learning classifier and agglomerative clustering. That post also describes our first models for linking similar organization and person entities.

As the Knowledge Graph (KG) was staged to serve applications, more human-in-the-loop effort was directed towards quality control. We received feedback in the form of company records manually marked as suspected duplicate organization vertices in the KG (false negatives for record linking).

Augmenting our training data with these new instances was a logical step, since they represent real-life use cases better than the synthetic examples we bootstrapped our initial model from.

We also wanted to improve what is learned by the model itself, so we decided to revise the training features as well. To achieve this, we split the new data into training and test parts and tested improvement ideas after analyzing and categorizing the original model’s false positive and false negative predictions.

We devised an improved normalization method for HomepageURL attributes which discards common URL path patterns (e.g. /home, /index.html, /en-us) so the model can focus on paths that do make a difference (e.g. amazon.com vs. amazon.com/dash). Based on this, we added a new three-valued exact-match feature (a binary match score extended with 0.5 to represent a missing value on either side). We also introduced a new three-valued feature that matches the top-level domains of URL pairs, in the hope that this would help to distinguish international subsidiaries, something we need to do frequently, e.g. amazon.com vs. amazon.co.uk.
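The two URL features described above can be sketched roughly as follows. This is a minimal illustration, not our production code: the `COMMON_PATHS` set, the function names and the "last dot-separated label" definition of a top-level domain are all assumptions made for the example.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical list of path segments to discard; the real pattern list is longer.
COMMON_PATHS = {"home", "index.html", "index.htm", "en-us"}


def normalize_homepage(url: Optional[str]) -> Optional[str]:
    """Lowercase the host, drop 'www.' and remove uninformative path segments."""
    if not url:
        return None
    # Add a scheme when missing so that urlsplit parses the host correctly.
    parts = urlsplit(url if "://" in url else "http://" + url)
    host = (parts.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if not host:
        return None
    segments = [s for s in parts.path.lower().split("/")
                if s and s not in COMMON_PATHS]
    return "/".join([host] + segments)


def three_value_match(a: Optional[str], b: Optional[str]) -> float:
    """1.0 for equal values, 0.0 for different values, 0.5 if either is missing."""
    if a is None or b is None:
        return 0.5
    return 1.0 if a == b else 0.0


def top_level_domain(url: Optional[str]) -> Optional[str]:
    """Naive assumption: the TLD is the last dot-separated label of the host."""
    normalized = normalize_homepage(url)
    if normalized is None:
        return None
    host = normalized.split("/")[0]
    return host.rsplit(".", 1)[-1]


def homepage_match(url_a: Optional[str], url_b: Optional[str]) -> float:
    return three_value_match(normalize_homepage(url_a), normalize_homepage(url_b))


def tld_match(url_a: Optional[str], url_b: Optional[str]) -> float:
    return three_value_match(top_level_domain(url_a), top_level_domain(url_b))
```

With these definitions, `homepage_match("http://amazon.com/home", "https://www.amazon.com/en-us/")` yields 1.0, while `tld_match("amazon.com", "amazon.co.uk")` yields 0.0 because the hosts end in different labels.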

For OrganizationName attributes, we added a new binary feature that represents whether either of the two names is a prefix or suffix of the other, e.g. Dominion vs. Dominion Energy, or Digital Realty Trust vs. Digital Realty. Finally, we added three new three-valued features for exact matching between normalized social media handles, derived from the companies’ FacebookURL, TwitterURL and LinkedInURL attributes.
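As a rough sketch of these two kinds of features, the snippet below compares names token by token and takes the last path segment of a social media URL as the handle. Both choices, along with all function names, are assumptions made for illustration; the actual normalization may differ.

```python
from typing import Optional
from urllib.parse import urlsplit


def name_prefix_or_suffix(name_a: str, name_b: str) -> float:
    """1.0 if the tokens of one name start or end the other name, else 0.0.

    Comparing whole tokens (an assumption) avoids matching 'Dom' to 'Dominion'.
    Identical names also count as a match.
    """
    tokens_a = name_a.lower().split()
    tokens_b = name_b.lower().split()
    if not tokens_a or not tokens_b:
        return 0.0
    shorter, longer = sorted((tokens_a, tokens_b), key=len)
    n = len(shorter)
    return 1.0 if longer[:n] == shorter or longer[-n:] == shorter else 0.0


def social_handle(url: Optional[str]) -> Optional[str]:
    """Assumed normalization: the last non-empty path segment, lowercased."""
    if not url:
        return None
    parts = urlsplit(url if "://" in url else "https://" + url)
    segments = [s for s in parts.path.split("/") if s]
    return segments[-1].lstrip("@").lower() if segments else None


def handle_match(url_a: Optional[str], url_b: Optional[str]) -> float:
    """Three-valued exact match: 1.0 equal, 0.0 different, 0.5 if either is missing."""
    a, b = social_handle(url_a), social_handle(url_b)
    if a is None or b is None:
        return 0.5
    return 1.0 if a == b else 0.0
```

For example, `name_prefix_or_suffix("Digital Realty Trust", "Digital Realty")` returns 1.0, and `handle_match("https://twitter.com/Apple", "twitter.com/apple/")` returns 1.0 after normalization.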

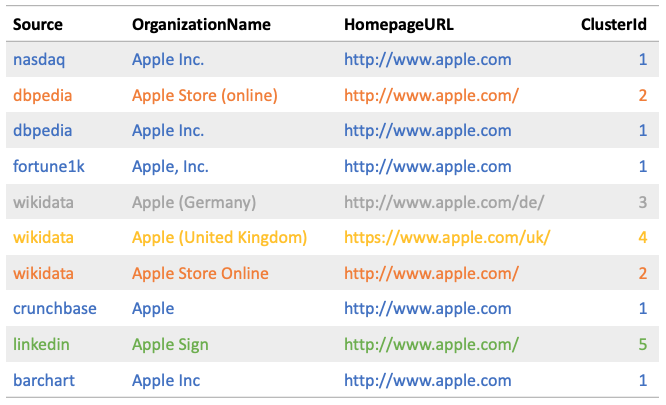

The new model, trained on the new data with the improved features, assigned the following 10 Apple Inc. subsidiaries and products to their 5 correct clusters, something the old model failed to do (it put all of them into a single cluster):

Table 1: Records related to Apple Inc. from 7 sources (colors indicating correct clustering)

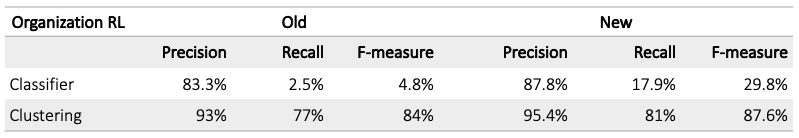

For formal evaluation, we compared classification and clustering metrics using the old and the new model on the same datasets: 640 company pairs from the human-in-the-loop annotation for the similarity classifier, and a 6-source 50-company gold standard for end-to-end clustering. The new model managed to improve performance on all metrics:

Table 2: Evaluation metrics for organization record linking, before and after the improvements

Improved Persons model

We made 2 major changes to the original model for the linking of person records:

- New, manually annotated (thus more precise) training set directly sampling (thus better representing) all our sources

- New classifier features for affiliation information (organizations where the person worked/works, job titles etc.)

The new training set was created by taking records representing leaders (CEOs and other executives, directors, board members etc.) for 1000 prominent US companies from one of our sources, and then manually finding the equivalent IDs from 3 other sources, yielding 1416 positive training pairs. 1869 negative training pairs were automatically generated by pulling in further records from all sources that had the same blocking keys as the 1000 core records but which were not in the positive clusters. These were also manually verified afterwards. The train-test split was 75%-25%.

The new features representing persons’ affiliations (e.g. places of work) are based only on attributes that are found in all sources in the new training data (which meant we discarded the features based on the BirthDate and Gender attributes used in the previous version). Five new features compare the normalized attribute values of the affiliated organizations (the places the person works or worked at):

- 2 features based on the OrganizationName of the affiliated company. These are the same as the revised OrganizationName features in the organizations model (minimal Damerau-Levenshtein and 3-value match on the normalized names)

- 2 features based on the HomepageURL of the affiliated company, again taken directly from the organization model (minimum normalized Levenshtein and 3-value TLD suffix matching)

- 1 new feature based on the Twitter handles extracted from the TwitterURLs of the affiliated companies (3-value exact matching).

A further new feature was introduced to represent the similarity between job titles of the affiliations, for which normalization consisted of:

- Keep only first 100 characters (to filter noise from some sources)

- Use only first 10 tokens (where the actual job titles probably appear, followed by the bio of the person)

- Segment by commas and “&”, e.g. “President & CEO” -> [“President”, “CEO”]

- Slugification to normalize capitalization, accents and punctuation differences

- Remove prefixes like co-, interim-, …

- Resolve abbreviations like ceo, cfo, cio, cto, coo, cmo etc.

- Replace certain suffixes, eg. chairman, chairwoman -> chairperson
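The normalization steps listed above could be chained roughly as in the sketch below. The `PREFIXES`, `ABBREVIATIONS` and `SUFFIXES` tables are small hypothetical stand-ins for the real lookup lists, and the expanded forms of the abbreviations are our assumption for this example.

```python
import re
import unicodedata
from typing import Set

# Hypothetical, abbreviated lookup tables for illustration only.
PREFIXES = ("co-", "interim-")
ABBREVIATIONS = {
    "ceo": "chief-executive-officer",
    "cfo": "chief-financial-officer",
    "cio": "chief-information-officer",
    "cto": "chief-technology-officer",
    "coo": "chief-operating-officer",
    "cmo": "chief-marketing-officer",
}
SUFFIXES = {"chairman": "chairperson", "chairwoman": "chairperson"}


def slugify(text: str) -> str:
    """Strip accents, lowercase, and collapse non-alphanumeric runs into '-'."""
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def normalize_job_titles(raw: str) -> Set[str]:
    """Return the set of normalized job titles found in a raw title string."""
    text = raw[:100]                      # keep only the first 100 characters
    text = " ".join(text.split()[:10])    # use only the first 10 tokens
    titles = set()
    for segment in re.split(r"[,&]", text):   # segment by commas and "&"
        slug = slugify(segment)
        for prefix in PREFIXES:               # remove prefixes like co-, interim-
            if slug.startswith(prefix):
                slug = slug[len(prefix):]
        # resolve abbreviations token by token
        slug = "-".join(ABBREVIATIONS.get(token, token) for token in slug.split("-"))
        for old, new in SUFFIXES.items():     # replace certain suffixes
            if slug.endswith(old):
                slug = slug[: -len(old)] + new
        if slug:
            titles.add(slug)
    return titles
```

For instance, `normalize_job_titles("President & CEO")` gives `{"president", "chief-executive-officer"}`, and `normalize_job_titles("Co-Chairman, Interim CFO")` gives `{"chairperson", "chief-financial-officer"}`.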

Person records have on average 3.2 affiliation attribute values in our data. The job title feature compares two person records by first discarding affiliations whose (normalized) company names do not match, then returning 1.0 if the records share at least one normalized job title, 0.0 if they do not, and 0.5 if the value is missing on either side.
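A minimal sketch of this job title feature is shown below. It assumes each person record's affiliations are available as a mapping from normalized company name to a set of normalized job titles, and it treats "no company in common with titles on both sides" as the missing case; both are assumptions for the example rather than a description of the exact implementation.

```python
from typing import Dict, Set

# Assumed representation: normalized company name -> normalized job titles.
Affiliations = Dict[str, Set[str]]


def job_title_feature(a: Affiliations, b: Affiliations) -> float:
    """Compare job titles only within affiliations that share a company name."""
    comparable = [
        (a[company], b[company])
        for company in a.keys() & b.keys()   # keep affiliations with matching company
        if a[company] and b[company]         # both sides must have at least one title
    ]
    if not comparable:
        return 0.5   # nothing to compare: treated as missing (assumption)
    shared = any(titles_a & titles_b for titles_a, titles_b in comparable)
    return 1.0 if shared else 0.0
```

Comparing titles per company, instead of pooling all titles of a person, avoids matching a CEO of one company with a CEO of a different one.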

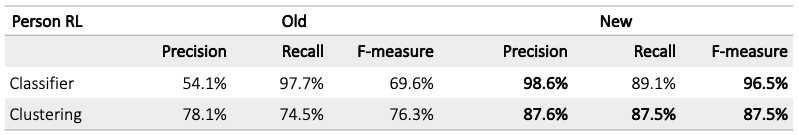

To evaluate the new model and to compare it against the old one, we used the test split of the new annotated data for both:

Table 3: Evaluation metrics for person record linking, before and after the improvements

Improved Blocking

The blocking algorithm used in the first organization and person record linking releases was based on the simplifying assumption that each record has exactly 1 blocking key. This allowed blocks to be calculated by a simple groupBy() Spark operation. Whenever a record had multiple values for the attribute the blocking key is calculated from (e.g. multiple PersonName aliases or multiple HomepageURL values), we picked a value at random, which is not optimal because records that match only on one of the other values can end up in different blocks. In addition, to tackle missing blocking attribute values we needed to generate blocking keys from multiple attributes of the same record, e.g. from both the HomepageURL and the OrganizationName of an organization.

We solved this challenge of generating blocks from records with multiple blocking keys by using a graph representation. In the blocking graph, vertices correspond to record ids, and we add an undirected edge between every two records that share a blocking key-value pair, e.g. blkey_org_name=apple (the value of the blocking key generated from the OrganizationName attribute is “apple” for both records). We then identify the connected components of the full graph. These connected components are the blocks, and we run agglomerative clustering separately inside each of them. We used GraphFrames, a graph-processing package for Apache Spark with a Python API; it supports distributed graph operations similar to Spark’s GraphX API but is built on Spark DataFrames rather than RDDs.
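To illustrate the idea on a single machine, the sketch below finds the same connected components with a plain union-find structure instead of GraphFrames. It is not the distributed implementation we run on Spark; the input format, the function name and the `blkey_homepage` key in the usage note are assumptions for the example.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Set, Tuple

BlockingKey = Tuple[str, str]  # (blocking key name, value), e.g. ("blkey_org_name", "apple")


def build_blocks(records: Dict[Hashable, Set[BlockingKey]]) -> List[Set[Hashable]]:
    """Group record ids into blocks: connected components of the blocking graph."""
    parent = {record_id: record_id for record_id in records}

    def find(x):
        # Walk up to the root, shortening the path along the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(x, y):
        root_x, root_y = find(x), find(y)
        if root_x != root_y:
            parent[root_y] = root_x

    # Linking each record to the first record seen with the same key-value pair
    # yields the same components as adding an edge between every such pair.
    first_seen: Dict[BlockingKey, Hashable] = {}
    for record_id, keys in records.items():
        for key_value in keys:
            if key_value in first_seen:
                union(first_seen[key_value], record_id)
            else:
                first_seen[key_value] = record_id

    blocks = defaultdict(set)
    for record_id in records:
        blocks[find(record_id)].add(record_id)
    return list(blocks.values())
```

Given records `r1` and `r2` sharing `blkey_org_name=apple`, and `r2` and `r3` sharing a hypothetical `blkey_homepage=apple.com`, all three land in one block even though `r1` and `r3` have no key in common, which is exactly what a single groupBy() on one key cannot achieve.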

Summary

We presented how we implemented record linking for Meltwater’s Knowledge Graph using machine learning and agglomerative clustering in an Apache Spark framework. For a full account of that please read The Record Linking Pipeline for our Knowledge Graph (Part 1).

We have improved the models for both organization and person record linking by:

- re-training the models using human-in-the-loop support to better represent real-life data

- revising and extending the models’ feature sets after careful analysis.

These changes improved the initial organization model significantly: by 25% (classifier F-score) and 3.76% (clustering F-score), and the person model by 26.9% (classifier F-score) and 11.2% (clustering F-score). All this enables higher quality content in the Knowledge Graph and the applications built on top of it, including Signals.

Acknowledgements: Record Linking was created by Márton Miháltz, Roland Stiller and Bhaskar Chakraborty.
