One of our teams at Meltwater recently faced a problem that required relatively simple tasks to be applied to a large volume of data. To solve it, we experimented with a pattern we call micro-pipelines: sequences of microservices that work together to create efficient, fault-tolerant systems.

This post provides an example of how we designed and built such a micro-pipeline.

The Challenge

Our team was tasked with taking data from several different sources and finding relationships between the items in them. The system needed to monitor website traffic data, at about 600 million events per day, identify the data points we were interested in, match them against content in our existing system from a few different data sources, and finally perform a few analysis tasks and store the results. The logic for each task (finding the items, matching them, and analyzing them) was relatively simple, but the volume of data led to various challenges.

In our initial design discussions, we had a few ideas that seemed workable, but we noticed two critical flaws in building an application to handle this volume of data.

The time to receive data from one source and look up the relevant matches in the other sources was several seconds per request. At our volume, that would require us to run about 21,000 processes simultaneously, 24 hours a day. From a monitoring and cost standpoint, that seemed like a nightmare to us.

Any failure in the process led to a loss of data and wasted resources. If an item in the process failed a few seconds in, it had to be started over. Even a modest failure rate would push our estimate of 21,000 processes even higher.
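As a rough check on that estimate, here is a back-of-the-envelope sketch. It assumes roughly 3 seconds per request (the post only says "several seconds") and, pessimistically, that a failed attempt costs as much as a successful one before it is restarted. The function name and the 5% failure rate are illustrative, not figures from our system.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def concurrent_processes(events_per_day, seconds_per_event, failure_rate=0.0):
    """Estimate how many processes must run at once to keep up with the load.

    Average concurrency = arrival rate * time spent per event.
    With restarts, each event needs 1 / (1 - failure_rate) attempts on average.
    """
    arrival_rate = events_per_day / SECONDS_PER_DAY  # events per second
    expected_attempts = 1 / (1 - failure_rate)
    return arrival_rate * seconds_per_event * expected_attempts


# Assumed: 3 seconds per request, no failures.
print(round(concurrent_processes(600_000_000, 3)))        # 20833

# Assumed: the same load with a hypothetical 5% failure rate.
print(round(concurrent_processes(600_000_000, 3, 0.05)))  # 21930
```

Under these assumptions, the no-failure case lands close to the 21,000 figure, and every point of failure rate adds a couple of hundred more processes.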

We started looking at a variety of tools for managing a large volume of similar processes, such as container orchestration and auto-scaling EC2 instances.

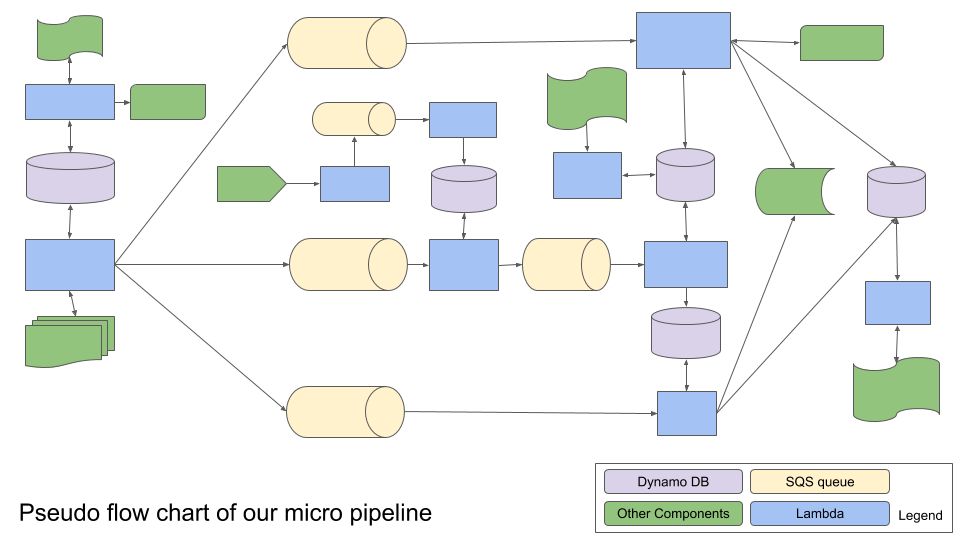

We also considered AWS Lambda functions. Lambdas were initially disregarded because we thought they would time out due to the long execution times of some of our more complex processes, but we were intrigued by the variety of ways to trigger and connect AWS Lambda functions with one another. With this in mind, we re-examined our original design and broke each part of our filtering process and matching algorithm into a discrete step. This resulted in the giant flow chart shown below.

We decided to break up our large application into a series of microservices (Lambdas) that execute small steps in the pipeline and use SQS, Dynamo, or SNS to trigger the next step in the process.

When figuring out how to connect all of these services together in an optimal way, we considered the following two questions for each step in the process.

1. Will this step filter or reduce the amount of data passed to the next step?

Our process has some simple steps that filter data down based on user preferences or the source of the data, as well as some more complicated steps that need to make API or database calls. To make the system as efficient as possible, we wanted to ensure that steps that reduce the total volume of data happened early in the pipeline. We also tried to keep the early filtering Lambdas as simple as possible. For example, rather than building one service that filters on four properties of the data, we built four small services that were each responsible for a single filter. Good candidates for early filtering include dropping tweets from accounts with no followers or stripping analytics data from countries our users aren’t monitoring.

Our goal was to spend as little time as possible on data that didn’t have value and ensure that later, more complicated processes didn’t get bogged down with tweets from bots or analytics from the Kingdom of North Dumpling (a failed country that caused a very tricky bug for us a few years ago).
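To illustrate the "one filter per service" idea, here is a minimal sketch of a single-purpose filter step. It assumes the Lambda is triggered by SQS, so each record carries its payload as JSON in `body`. The `followers` field, the `make_filter_handler` helper, and the `forward` callback (which in a real step would publish to the next queue) are hypothetical names for illustration, not our production code.

```python
import json


def make_filter_handler(predicate, forward):
    """Build a Lambda-style handler that forwards only items passing one filter."""

    def handler(event, context=None):
        records = event.get("Records", [])
        forwarded = 0
        for record in records:
            item = json.loads(record["body"])
            if predicate(item):
                forward(item)  # e.g. send to the next step's queue
                forwarded += 1
        return {"received": len(records), "forwarded": forwarded}

    return handler


def has_followers(item):
    """Single-property filter: drop tweets from accounts with no followers."""
    return item.get("followers", 0) > 0


# Local usage example with an in-memory list standing in for the next queue.
next_step = []
handler = make_filter_handler(has_followers, next_step.append)
event = {
    "Records": [
        {"body": json.dumps({"id": 1, "followers": 0})},
        {"body": json.dumps({"id": 2, "followers": 150})},
    ]
}
result = handler(event)
```

Because each handler wraps exactly one predicate, a filter can be tested, reordered, or replaced without touching the others.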

2. Is the end result of this step a valuable piece of data or a trigger for another step?

When chaining these processes together in a pipeline, we needed to pass data between them. AWS provides different tools for data storage, queueing, notifications, and file storage, so we needed to narrow down exactly what each step would accept as inputs and produce as outputs.

Our two primary concerns with passing data and events between processes were efficiency and fault tolerance. Systems like SNS and SQS provide very efficient ways to pass events and small amounts of data between processes, but they aren’t intended to store data long-term and are not ideal for fault recovery. Dynamo is a great tool for storing larger data items and, unlike SQS, provides permanent data storage, but it was slower than having the data passed in as part of the Lambda trigger.

We took a look at each step in our process and used SQS as the output target when we were outputting a single value, like the id of an item in a database, a URL, or some other data item that had little debugging or recovery value on its own. We used Dynamo to store outputs that were either larger objects or points where we could easily restart the process in the event of a failure.
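The rule of thumb above can be written down as a small decision function. This is only a sketch: in practice we made this choice per step at design time rather than at runtime, and the 1 KB cutoff for "small" is an arbitrary assumption, as are the function and constant names.

```python
import json

# Hypothetical cutoff for what counts as a "small" trigger payload.
SMALL_PAYLOAD_BYTES = 1024


def choose_output_target(payload, is_restart_point=False):
    """Return "dynamo" for large outputs or restart points, otherwise "sqs"."""
    size = len(json.dumps(payload).encode("utf-8"))
    if is_restart_point or size > SMALL_PAYLOAD_BYTES:
        return "dynamo"
    return "sqs"


# A bare id has little recovery value on its own, so it travels via the queue.
small = choose_output_target({"id": 42})

# The same id at a step we want to be able to restart from gets persisted.
checkpoint = choose_output_target({"id": 42}, is_restart_point=True)

# A large matched document is stored rather than passed in the trigger.
large = choose_output_target({"id": 42, "content": "x" * 5000})
```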

Organizing and Deploying the Micro-Pipeline

At the end of this process, we had a system that could handle our projected load efficiently and recover from a variety of system failures. We also had a system that was easy to update, since each step could be independently tested and replaced. Unfortunately, we were also left with the task of deploying and updating a dozen Lambdas, several Dynamo tables, a few SQS queues, and two API Gateways.

We needed a way to deploy the entire system at once across different environments, and we wanted to monitor the state of our infrastructure. Storing all of the source code for the pipeline in a single GitHub repo allowed us to easily deploy things together via our CI tool, and made it easier to share code between processes. CloudFormation was a great solution for managing infrastructure, but having everything in the same repo led to an enormous YAML config file that was thousands of lines long.

We solved this by creating a separate config file for each Lambda function that included any direct dependencies and any event sources that fed into it. For example, a Lambda that consumed messages from SQS would have the definition of that SQS queue stored in its config file. In our build process, our CI tool concatenates all of the YAML files together into a single config file, uses mustache to replace values that are specific to each environment, then deploys the whole file via CloudFormation.

This allows us to easily make edits to a Lambda function while keeping the configuration and input sources for that Lambda in the same folder as its source code. We’re currently looking at ways to further improve this process, like making each step a separate node module and creating a system for them to expose their infrastructure configuration requirements to the parent app. Our goal is to make each of these steps a portable, configurable unit, allowing us to rapidly compose new pipelines when needed.
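The concatenate-then-substitute step can be sketched in a few lines. This is an illustration under assumptions, not our CI script: it assumes each per-Lambda file holds resource entries already indented to sit under a shared `Resources:` header, and it uses a tiny regex as a stand-in for mustache that handles only plain `{{name}}` variables. Unlike real mustache, which renders a missing variable as an empty string, this sketch raises an error so a forgotten value fails the build. The resource names and the `env` variable are made up.

```python
import re

# Matches simple mustache-style variables such as {{env}} or {{ env }}.
_VARIABLE = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def render(template, values):
    """Replace each {{name}} with values[name]; fail loudly if one is missing."""

    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value provided for template variable {name!r}")
        return str(values[name])

    return _VARIABLE.sub(substitute, template)


def build_template(header, fragments, values):
    """Concatenate per-Lambda fragments under one header, then substitute values."""
    body = "\n".join(fragment.rstrip("\n") for fragment in fragments)
    return render(header.rstrip("\n") + "\n" + body + "\n", values)


# Hypothetical fragments, as they might live next to one Lambda's source code.
QUEUE_FRAGMENT = """\
  MatchQueue:
    Type: AWS::SQS::Queue
    Properties:
      QueueName: match-queue-{{env}}
"""

TRIGGER_FRAGMENT = """\
  MatchQueueTrigger:
    Type: AWS::Lambda::EventSourceMapping
    Properties:
      EventSourceArn: !GetAtt MatchQueue.Arn
      FunctionName: matcher-{{env}}
"""

staging_template = build_template(
    "Resources:", [QUEUE_FRAGMENT, TRIGGER_FRAGMENT], {"env": "staging"}
)
```

The resulting string is what would then be handed to CloudFormation for the chosen environment.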

The Results

We have been running a few different systems using this micro-pipeline pattern for several months now. We especially like how micro-pipelines let us react to incidents, as these two examples illustrate:

Early on, we had a network failure with a partner and had to handle three days’ worth of data in one day. We increased the auto-scaling threshold on the first two Lambda functions in our pipeline and were back on top of things in less than 24 hours. We didn’t have to redeploy, and we didn’t have to be concerned with the costs of scaling our whole pipeline up, just the portions that were under additional load.

In a similar situation, one of the APIs we were calling had a breaking change, causing things to back up. We deployed a small change to one service and were able to pick back up without restarting everything from the beginning of the pipeline.

This pattern of decomposing large applications into a series of event-driven microservices has served our team well. The flexibility and fault tolerance of these systems have been great, and managing the complexity, which was one of our initial concerns, has been mostly painless thanks to solid infrastructure-as-code tools. As we replace legacy code and build new features, we will likely be leveraging this pattern more, and we believe it will allow us to deliver features faster as we build up a library of reusable microservices.

Please comment below if you’ve used similar patterns or have ideas on how to make it even better.

Image credit:

multicoloured-water-pipelines (from freepik.com)