At Meltwater, Elasticsearch is at the heart of our product, and we’re constantly looking for ways to improve our usage of it and make it more performant. Recently, during a routine rolling restart, we noticed that the first backup taken after the restart took up to 7 hours instead of the normal 30 minutes. We also noticed that our snapshot storage suddenly increased in size by about 500TB. Elasticsearch performs incremental snapshots so that only newly indexed data is uploaded, so both of these observations were unexpected: the restart should not have caused any sudden change in the data. We took a closer look and were able to figure out what the problem was.

In Elasticsearch, the data is divided into multiple shards that can be spread out across the different nodes in the cluster. For availability and scalability, each of these shards should have multiple copies: one copy is the primary shard, and the rest are replicas.

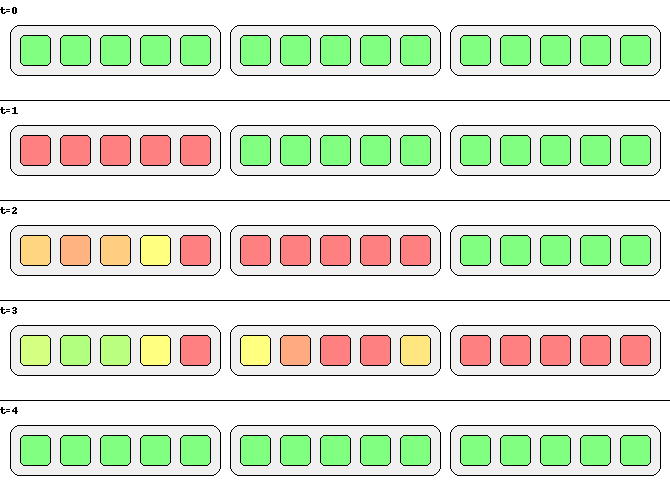

When a node is restarted, all primary shards on that node lose their primary status and a replica shard on another node gets promoted to primary instead. So during a rolling restart where all nodes are restarted, all primary shards will lose their primary status and become replicas instead. Some may become primary again when another node is restarted. Essentially, all primary shards are shuffled around. Note that no shards are moved, only their status as primary or replica is changed. An illustration of this can be seen at the end of this article.

Since the data is the same on all copies of a shard, it is enough to take a snapshot of one of the copies. Elasticsearch uses the primary shard to perform the snapshot operation. Each shard is made up of multiple immutable segments, which is how the data is laid out on disk. Indexing new data creates new segments. When taking a snapshot, Elasticsearch compares the shard's segments with those in previous snapshots to decide which segments need to be saved. These segments are not synchronized between copies of a shard, so each copy ends up with its own set of segments even though the data is the same. This means that when the “new” primary shard is snapshotted for the first time, none of its segments overlap with what is already in the snapshot storage, and the whole shard has to be uploaded.
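To make the effect concrete, here is a deliberately simplified model of the incremental comparison. It assumes segments can be compared by name alone, and the segment file names are hypothetical; the real comparison in Elasticsearch involves more metadata than this.

```python
def segments_to_upload(shard_segments, already_in_repository):
    """Return the segments an incremental snapshot would still have to upload.

    Simplified model: a segment is skipped only if an identically named
    segment is already present in the snapshot repository.
    """
    return set(shard_segments) - set(already_in_repository)


# Hypothetical segment names, for illustration only.
repository = {"_a", "_b", "_c"}         # uploaded earlier from the old primary
old_primary = {"_a", "_b", "_c", "_d"}  # one new segment since the last snapshot
new_primary = {"_x", "_y", "_z"}        # same documents, but different segments

print(sorted(segments_to_upload(old_primary, repository)))  # ['_d']
print(sorted(segments_to_upload(new_primary, repository)))  # ['_x', '_y', '_z']
```

In this model the old primary only uploads its one new segment, while the newly promoted copy has to upload everything.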

We store our snapshots on Amazon S3 using a combination of the Standard and Infrequent Access storage classes. We keep two weeks of snapshots, and the data needs to be stored for a minimum of 30 days before it can transition to Infrequent Access (we are currently measuring whether this is the most cost-efficient solution). With this setup, we observed that it took about 40 days after the sudden increase of 500TB for the storage to drop again by the same amount. If these additional 500TB are stored for 30 days in S3 Standard and another 10 days in Infrequent Access, that sums up to a one-time cost of $12,500, something we felt should be possible to avoid for future restarts.
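The cost estimate can be reproduced with a rough back-of-the-envelope calculation. The per-GB-month prices below are assumptions chosen for illustration, not figures from our bill; real S3 prices depend on region and volume tier, and the sketch ignores request costs and minimum storage duration charges.

```python
GB_PER_TB = 1000  # decimal terabytes


def extra_storage_cost(size_tb, standard_days, ia_days, standard_price, ia_price):
    """Estimate the one-time cost of storing extra data for a limited time.

    Prices are in USD per GB per 30-day month (assumed values, see above).
    """
    size_gb = size_tb * GB_PER_TB
    standard_cost = size_gb * standard_price * standard_days / 30
    ia_cost = size_gb * ia_price * ia_days / 30
    return standard_cost + ia_cost


# Assumed prices: $0.021 per GB-month for Standard, $0.0125 for Infrequent Access.
cost = extra_storage_cost(500, standard_days=30, ia_days=10,
                          standard_price=0.021, ia_price=0.0125)
print(f"${cost:,.0f}")  # $12,583
```

Under these assumed prices the result lands close to the $12,500 mentioned above.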

The solution

If all primary shards remained on the same nodes after the rolling restart, incremental snapshotting would continue working as usual and would not be affected by the restart. Our proposed algorithm was therefore:

1. Disable snapshotting
2. Store a state of where all primaries are located
3. Perform rolling restart
4. Promote primaries back to their original node
5. Enable snapshotting
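Storing the state and later finding out which primaries need to be promoted back boils down to recording a mapping and diffing against it. The following is a minimal sketch, assuming rows shaped like the JSON output of the cat shards API (requested with `format=json` and the `index`, `shard`, `prirep` and `node` columns). The function names, index name and node names are hypothetical.

```python
def primary_locations(cat_shards_rows):
    """Map (index, shard number) to the name of the node holding the primary."""
    return {
        (row["index"], row["shard"]): row["node"]
        for row in cat_shards_rows
        if row["prirep"] == "p"
    }


def misplaced_primaries(before, after):
    """Return the shards whose primary is no longer on its original node."""
    return {
        shard: original_node
        for shard, original_node in before.items()
        if after.get(shard) != original_node
    }


# Hypothetical rows captured before and after a rolling restart.
rows_before = [
    {"index": "docs", "shard": "0", "prirep": "p", "node": "node-a"},
    {"index": "docs", "shard": "0", "prirep": "r", "node": "node-b"},
    {"index": "docs", "shard": "1", "prirep": "p", "node": "node-b"},
    {"index": "docs", "shard": "1", "prirep": "r", "node": "node-a"},
]
rows_after = [
    {"index": "docs", "shard": "0", "prirep": "r", "node": "node-a"},
    {"index": "docs", "shard": "0", "prirep": "p", "node": "node-b"},
    {"index": "docs", "shard": "1", "prirep": "p", "node": "node-b"},
    {"index": "docs", "shard": "1", "prirep": "r", "node": "node-a"},
]

before = primary_locations(rows_before)
after = primary_locations(rows_after)
print(misplaced_primaries(before, after))  # {('docs', '0'): 'node-a'}
```

Only shard 0 of the example index needs its primary promoted back; shard 1 kept its primary on the original node.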

The only problem with this proposed solution was that Elasticsearch has no feature to explicitly promote a replica shard to become the primary. That process is only triggered when the primary shard is canceled, as happens during a restart [1] [2]. Canceling a primary shard, however, can be done explicitly using the cluster reroute API, which then triggers a replica to be promoted to primary [3].

We tried this approach and it worked as suggested: canceling a primary shard resulted in a replica getting promoted to primary. However, in our cluster we have 3 copies of each shard, one primary and two replicas. We needed to control which of the replicas would get promoted, so that we could select the one that was the primary before the rolling restart. This could be done by issuing two cancel commands in the same reroute call, canceling the two copies we did not want to be the primary. We also had a heuristic based on the observation that it is always the “first” replica that gets promoted to primary, following the same order as the output of the cat shards API. This allowed us to cancel only one shard about half of the time. This worked pretty well, but we noticed that the canceled shards were pretty slow to sync up to the new primary shard. We managed to speed this up by also issuing a flush call towards the affected indices before each batch of cancellations.
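The selection logic for a single shard could look roughly like the sketch below. It is an illustration rather than our actual script: it assumes the copies are given as cat shards rows in the order the API returned them, and it relies on the observed (not guaranteed) behavior that the first listed replica is the one promoted. The `cancel` command and its `allow_primary` flag belong to the cluster reroute API; the function and node names are hypothetical.

```python
def cancel_commands(copies, target_node):
    """Build reroute cancel commands so the copy on target_node becomes primary.

    copies: cat shards rows for ONE shard, in cat shards output order.
    """
    primary = next(c for c in copies if c["prirep"] == "p")
    if primary["node"] == target_node:
        return []  # the primary is already where we want it

    replicas = [c for c in copies if c["prirep"] == "r"]
    if all(r["node"] != target_node for r in replicas):
        raise ValueError("target node does not hold a copy of this shard")

    to_cancel = [primary]
    # Heuristic: the first listed replica is expected to be promoted, so the
    # other replicas only need canceling when the first one is not the target.
    if replicas[0]["node"] != target_node:
        to_cancel += [r for r in replicas if r["node"] != target_node]

    return [
        {
            "cancel": {
                "index": c["index"],
                "shard": int(c["shard"]),
                "node": c["node"],
                # Canceling a primary must be allowed explicitly.
                "allow_primary": c["prirep"] == "p",
            }
        }
        for c in to_cancel
    ]


# Hypothetical shard with one primary and two replicas.
copies = [
    {"index": "docs", "shard": "0", "prirep": "p", "node": "node-a"},
    {"index": "docs", "shard": "0", "prirep": "r", "node": "node-b"},
    {"index": "docs", "shard": "0", "prirep": "r", "node": "node-c"},
]
print(len(cancel_commands(copies, "node-b")))  # 1
print(len(cancel_commands(copies, "node-c")))  # 2
```

When the target is the first listed replica, a single cancel is enough; otherwise both of the other copies are canceled in the same call.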

We already had a Python script that we used to do our rolling restarts, so we added this new logic to it. The script first fetches the current state using the cat shards API before doing the restarts. After all restarts are completed, it selectively cancels shards until the primary shard placements are restored to where they were before the rolling restart. Using this script and sending up to 200 cancel commands in a single request to the cluster reroute API, we could restore 14k misplaced primary shards to their initial nodes in around 45 minutes. The cluster was yellow for most of this time, since the canceled shards become unassigned for a brief moment, but cluster performance was not visibly impacted.
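The batching step can be sketched as follows. This is not the script itself but a minimal illustration: it only prepares, for each batch, the list of indices to flush and the JSON body for the cluster reroute API, leaving the HTTP calls out. The helper names and the example commands are hypothetical.

```python
import json


def batches(commands, size=200):
    """Split the list of cancel commands into chunks of at most `size`."""
    for start in range(0, len(commands), size):
        yield commands[start:start + size]


def reroute_requests(commands, size=200):
    """Yield (indices_to_flush, reroute_body) for each batch of commands."""
    for batch in batches(commands, size):
        # Flush these indices first (POST /<indices>/_flush), then send the
        # body to the cluster reroute API (POST /_cluster/reroute).
        indices = sorted({cmd["cancel"]["index"] for cmd in batch})
        yield indices, json.dumps({"commands": batch})


# 450 hypothetical cancel commands spread over three indices.
commands = [
    {"cancel": {"index": f"docs-{i % 3}", "shard": i, "node": "node-a",
                "allow_primary": True}}
    for i in range(450)
]
requests = list(reroute_requests(commands))
print([len(json.loads(body)["commands"]) for _, body in requests])  # [200, 200, 50]
```

Keeping the batch size as a parameter makes it easy to trade restoration speed against the number of shards that are unassigned at any one time.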

Illustration of a simulated rolling restart, one zone at a time. There are 5 nodes in each of the 3 zones. The color of a node indicates how many of its initial primary shards it has remaining (not related to Elasticsearch cluster status). A green node has all its initial primary shards and a red node only has replicas. At first, all nodes are green. In the next time steps as zones are restarted, each zone becomes red as all its shards lose their primary status and a replica in another zone is promoted, necessitating a new expensive snapshot. Some shards may get promoted back to primary on their original node by chance. After restarts have completed, we promote primary shards back to their original nodes, getting back to the initial state where incremental snapshots can continue.

Overall, we are satisfied with our solution. We do feel that it is a hack, as this is not how the cluster reroute API was intended to be used. A proper primary shard promotion API would make this process much smoother. We did briefly consider whether it could be implemented as a plugin, but it seemed difficult. So for now, this promotion through cancellation works for us, and we can do rolling restarts effectively for free.