Love it or hate it, CSV is a format many developers have to deal with at some stage. It’s lightweight and it’s understood by many platforms and humans. However, if you’re using non-ASCII characters (like an umlaut on the word über or accent in José) and you have users viewing your CSV files in Microsoft Excel, things get a bit more complicated.

Most Excel users open CSV files simply by double-clicking the file and waiting for Excel to import it automatically. But if Excel doesn’t decode the CSV file correctly, your data will end up as a garbled mess.

How do we avoid this when generating CSV files in Elixir?

–Deprecated Content–

Excel Friendly Exports are now handled natively in the nimble_csv libary.

You should now be able to avoid all the BOM logic by using the NimbleCSV.Spreadsheet config when defining the parser:

NimbleCSV.define(NimbleCSV.Spreadsheet, [])

The content below is no longer necessary, but may still help explain output issues some folks encounter when generating CSV output for Excel.

Generating CSV files in Elixir

We’re using the nimble_csv library from José Valim to generate CSV. It’s simple, lightweight and fast.

Here’s a basic example of using it to generate a CSV file:

# this is our NimbleCSV parser which we define inline for tab-delimited CSV

NimbleCSV.define(CSVParser, separator: "\t", escape: "\")

defmodule Demo.Users.CSVWriter do

@user_data [

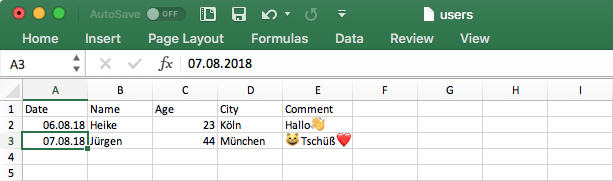

~w(Date Name Age City Comment),

~w(2018-08-06 Heike 23 Köln Hallo👋),

~w(2018-08-07 Jürgen 44 München 😸Tschüß❤️)

]

def write_csv_file() do

File.write!("users.csv", data_to_csv)

end

defp data_to_csv() do

@user_data

|> CSVParser.dump_to_iodata()

end

end

The dump_to_iodata() function takes a list of lists (representing a list of rows) and returns an enumerable of IO data which we write to a file.

Elixir ❤️ UTF-8

From Elixir’s Documentation:

A string is a UTF-8 encoded binary.

By default, elixir will read and write strings in UTF-8, so the CSV file we generated above will be UTF-8 encoded. That’s great for most platforms, but not for Microsoft Excel.

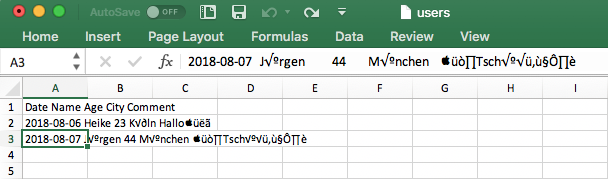

Opening our file in Excel, gives us something like this:

Excel, doesn’t realise the CSV file is UTF-8 encoded. It decodes the file incorrectly and leaves us with a mishmash of bad characters where non-ascii characters should be, and everything one column.

It is possible to tell Excel to interpret the CSV encoding correctly, by importing the file and setting the encoding to ‘UTF-8 Unicode’ using the Import Wizard. But many of our users don’t know that. We’d need to write some documentation to help them, which may be different for different Excel versions on different platforms. What if it was possible to help Excel figure out the CSV encoding?

Adding a UTF-8 BOM

After seeing the ugly mess Excel had made with my CSV file, I did some research and came across this Elixirforum thread. One of the suggestions was to add a BOM to the start of the file.

What’s a BOM?

From Wikipedia:

The byte order mark (BOM) is a Unicode character, U+FEFF byte order mark (BOM), whose appearance as a magic number at the start of a text stream can signal several things to a program consuming the text

Adding a BOM character at the start of the file can help Excel interpret the correct encoding for the CSV file. The unicode erlang module provides a handy function to generate BOM chars for different encodings.

To generate a UTF-8 BOM char we call the encoding_to_bom function with our encoding type:

iex(2)> :unicode.encoding_to_bom(:utf8)

"\uFEFF"

This function gives us the binary representation of the BOM character. We can add a BOM to the start of our CSV file to force Excel to decode the file as UTF-8.

Adding this to our CSV generation:

@bom :unicode.encoding_to_bom(:utf8)

def write_csv_file do

File.write!("users.csv", [@bom, data_to_csv])

end

defp data_to_csv do

@user_data

|> CSVParser.dump_to_iodata()

end

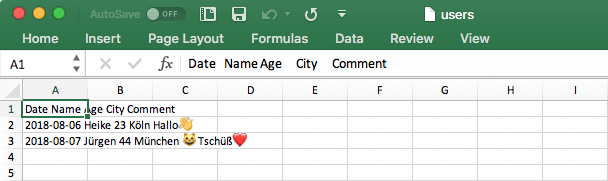

Opening the generated CSV file in Excel:

This time Excel has interpreted the file as UTF-8 encoded. It’s decoding our non-acsii characters, but it’s not picking up our tab delimiters so everything is in one column - what now?

Excel ❤️ UTF-16

Following some more research, I came across a helpful blog post from Jesse G. Donat, suggesting encoding CSV as UTF-16, specifically, tab delimited, UTF-16 little-endian. Okay, so how do we do that in Elixir?

I ⃞ :unicode

Once again, the erlang :unicode library helps us out. It provides a function to transform characters in one encoding, to a binary in another encoding. We can modify our CSV generation to generate binaries in UTF-16:

def write_csv_file do

File.write!("users.csv", [data_to_csv])

end

defp data_to_csv do

@user_data

|> CSVParser.dump_to_iodata()

|> :unicode.characters_to_binary(:utf8, {:utf16, :little})

end

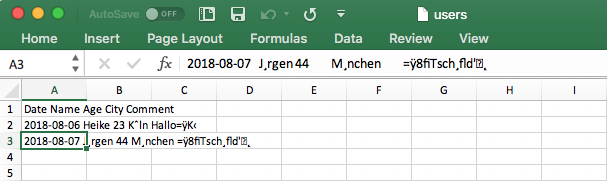

Opening the generated CSV file in Excel:

What? Now it’s even worse. There’s one last thing that’s needed..

Don’t forget the 💣!

We still need to add a BOM character to tell Excel this file is UTF-16 LE encoded:

@bom :unicode.encoding_to_bom({:utf16, :little})

def write_csv_file do

File.write!("users.csv", [@bom, data_to_csv])

end

defp data_to_csv do

@user_data

|> CSVParser.dump_to_iodata()

|> :unicode.characters_to_binary(:utf8, {:utf16, :little})

end

And finally our CSV file looks good in Excel! 🎉 Our non-ASCII characters are displayed correctly and our tab delimiters being detected to display our data in multiple columns:

Conclusion

Of course, if you’re generating data for Excel users, you’re better off outputting excel-specific files using a library such as elixlsx. But sometimes you need Excel and CSV files to play nicely together, and to do that you need to encode your CSV files using tab-delimited, UTF-16 little-endian encoding. Adhering to this should ensure your CSV is handled correctly by Excel, and still work fine in other CSV readers.

Hopefully, the details above will save you the searching I went through to figure out how to do this with Elixir.