Have you implemented a system that is supposed to perform tasks at regular intervals? Does the repeated failure of such a system pose a threat to your quality of service? If so, you will want to be alerted if your system suddenly stops performing these tasks.

We at Meltwater’s Premium Content team had this exact requirement. In this post we share how we used Cloudwatch to monitor the heartbeat of our system.

Our Use Case

We use Amazon Cloudwatch as a logging solution for most of our services. We then configure alarms on these logs to alert the team if a service malfunctions. This type of Cloudwatch-based monitoring helps us identify any kind of threshold breach, e.g. if the volume of an incoming document stream drops below a defined threshold or if a specific type of error occurs more often than usual. In other words, this approach provides a way to alert on events that have occurred.

But what about our use case: being alerted on events that were expected to occur but did not? As it turns out, Cloudwatch can do that too.

For a bit of context: Our team is responsible for bringing new content from various premium providers into Meltwater. We also need to track usage statistics for this content. To prevent any data loss, we need to take continuous backups, which we decided to store in S3. These backups are so crucial for us that we needed to monitor the correct generation of said backups.

Trying Out Various Approaches

We had already implemented a monitoring-service in golang that kept an eye on our reporting solutions and checked the responsiveness of the corresponding APIs. It also verified that daily reports were generated when they should be. (Also see the write-up about a very similar synthetic monitoring approach by Meltwater’s API team.)

Our first approach was to extend the same service to monitor our backups too. However, we ran into performance issues during testing. The rate of growth of backup objects in S3 was simply too high to be monitored by our monitoring-service.

Our second approach was to use S3 notifications. AWS lets you configure event notifications on S3 buckets. Depending on your configuration, each time an object is added to the bucket under a specified prefix, S3 can invoke a Lambda function, send an SQS message, or notify an SNS topic of your choice. The notification contains basic information about the event along with the key of the newly added object. This feature is really handy when you need to perform some action for each object created in the bucket, but it is overkill if you only want to track whether the event occurred or not. So, once again we searched for something simpler.
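To give a feel for what handling each notification would involve, here is a minimal sketch of a Lambda handler in Python. It is not code we ran; the function names `created_keys` and `handler` are made up for illustration, and it assumes the standard S3 event notification layout (a `Records` list with `eventName`, `s3.bucket.name` and `s3.object.key`).

```python
from urllib.parse import unquote_plus


def created_keys(event):
    """Return (bucket, key) pairs for every object-created record in an S3 event."""
    pairs = []
    for record in event.get("Records", []):
        # Only object-creation events matter for a "was a backup written?" check.
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in notifications (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def handler(event, context):
    # Hypothetical Lambda entry point: it runs for every notification,
    # i.e. for every single backup object that lands in the bucket.
    for bucket, key in created_keys(event):
        print(f"new object s3://{bucket}/{key}")
```

Even this small handler would run for every object, and we would still need extra state somewhere to notice that no invocation happened for a while, which is the actual question we wanted answered.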

Discovering S3 Bucket Metrics

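Finally, we discovered S3 Bucket Metrics. We found that this approach was the simplest and most suitable for our use case. Like most AWS resources, S3 has its own metric namespace (AWS/S3), which contains standard metrics for each bucket. Request metrics such as PutRequests are not published by default; they have to be enabled with a metrics configuration on the bucket.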

Here is how we set up alerting on our backups using terraform:

Track all request metrics of the bucket, for a given prefix:

    resource "aws_s3_bucket_metric" "backups" {
      bucket = "${aws_s3_bucket.reporting-api.bucket}"
      name   = "backups"

      filter {
        prefix = "/backup"
      }
    }

If you use Opsgenie for alert management just like us, create an Opsgenie integration using SNS:

    resource "aws_sns_topic" "ops_genie_integration" {
      name = "ops_genie_integration"
    }

    resource "aws_sns_topic_subscription" "ops_genie_subscription" {
      topic_arn              = "${aws_sns_topic.ops_genie_integration.arn}"
      protocol               = "https"
      endpoint               = "https://api.opsgenie.com/v1/json/Cloudwatch?apiKey=<api_key>"
      endpoint_auto_confirms = "true"
    }

Configure a Cloudwatch alarm based on the occurrence of PutRequests to the reporting bucket:

    resource "aws_cloudwatch_metric_alarm" "backups_missing" {
      alarm_name          = "backups_missing"
      comparison_operator = "LessThanThreshold"
      evaluation_periods  = "6"
      metric_name         = "PutRequests"
      namespace           = "AWS/S3"
      period              = "300"
      threshold           = "1"
      datapoints_to_alarm = "6"
      statistic           = "Maximum"

      dimensions {
        BucketName = "reporting-api"
        FilterId   = "backups"
      }

      alarm_description  = "Missing backups since last 30 mins"
      actions_enabled    = true
      treat_missing_data = "breaching"
      alarm_actions      = ["${aws_sns_topic.ops_genie_integration.arn}"]
      ok_actions         = ["${aws_sns_topic.ops_genie_integration.arn}"]
    }

The key setting here is the treat_missing_data option, which tells Cloudwatch how to handle periods in which no data points are received from S3. When set to “breaching”, Cloudwatch treats each missing data point as a breach of the threshold. The datapoints_to_alarm option tells Cloudwatch how many data points need to satisfy the breach condition before the state changes to “ALARM” and the configured alarm_actions are invoked.

It is important to note that the breaching data points need not be consecutive for the alarm to fire. Cloudwatch evaluates the alarm condition for any m out of the last n data points (where m = datapoints_to_alarm and n = evaluation_periods). The trick is to set m = n so that the alarm only fires on consecutive failures. In our case, the backup mechanism can produce a maximum of 6 data points in 30 minutes, so the alarm condition evaluates to “If 6 out of 6 data points in the last 30 minutes are missing => ALARM”.
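The m-out-of-n rule is easier to see with a small simulation. The Python sketch below is a simplified model of that rule only, not Cloudwatch's actual implementation (which has additional nuances around how far back it looks for missing data); the function name `alarm_state` is invented for this example.

```python
def alarm_state(datapoints, threshold=1, evaluation_periods=6, datapoints_to_alarm=6):
    """Simplified m-out-of-n evaluation for a LessThanThreshold alarm.

    `datapoints` holds one value per period, oldest first. None marks a period
    without data, which counts as breaching (treat_missing_data = "breaching").
    """
    # Only the last n periods take part in the evaluation.
    window = datapoints[-evaluation_periods:]
    breaching = sum(1 for value in window if value is None or value < threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# One backup arrived 30 minutes ago, then nothing: 5 of 6 breaching, still OK.
print(alarm_state([1, None, None, None, None, None]))
# Six missing periods in a row: 6 of 6 breaching.
print(alarm_state([None] * 6))
# With m = 3 the alarm fires even though the gaps are not consecutive.
print(alarm_state([None, 1, None, 1, None, 1], datapoints_to_alarm=3))
```

The last call shows why m = n matters: with m smaller than n, an alternating pattern of present and missing backups would already page someone.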

Because the period of the metric equals the backup creation interval (300 seconds) and the threshold is 1, Cloudwatch expects at least one PutRequest in every 300-second period. Our system is allowed to skip a few “beats” intermittently, as long as it recovers within 30 minutes.
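To illustrate how the period, the Maximum statistic and the threshold interact, here is a rough Python model. It assumes samples arrive as hypothetical `(timestamp_in_seconds, request_count)` pairs; the helper names `period_maximums` and `is_breaching` are ours and do not correspond to any AWS API.

```python
def period_maximums(samples, start, period=300, periods=6):
    """Aggregate (timestamp, value) samples into fixed periods using Maximum.

    Returns one entry per period, oldest first. None means that no sample
    fell into that period, i.e. the data point is missing.
    """
    result = [None] * periods
    for timestamp, value in samples:
        index = int((timestamp - start) // period)
        if 0 <= index < periods:
            current = result[index]
            result[index] = value if current is None else max(current, value)
    return result


def is_breaching(maximum, threshold=1):
    # LessThanThreshold, with missing data treated as breaching.
    return maximum is None or maximum < threshold


# Backups written in the first and third 5-minute period, then silence.
maximums = period_maximums([(10, 1), (20, 3), (650, 1)], start=0)
print(maximums)
print([is_breaching(m) for m in maximums])
```

Here four of the six periods are breaching, so with datapoints_to_alarm set to 6 the alarm would stay in the OK state.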

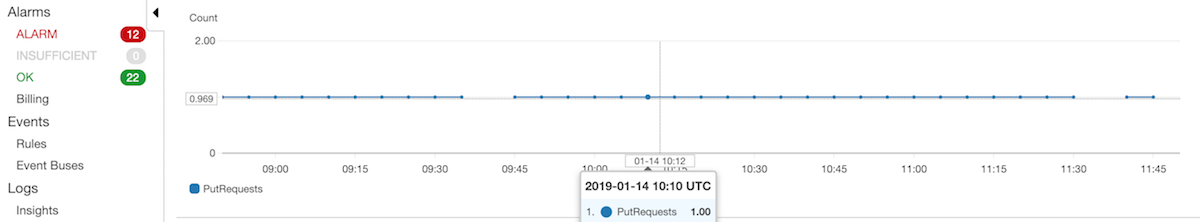

Here is what normal operation looks like when you view the bucket metric in Cloudwatch. Backups are created at a steady frequency, with an occasional one missing.

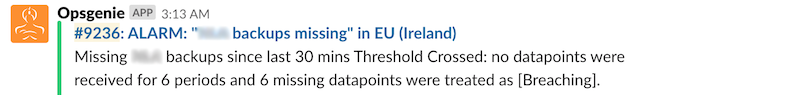

When, for some reason, backups fail to recover from skipped beats for more than 30 minutes, the configured alarm_actions notify the Opsgenie SNS topic and voilà:

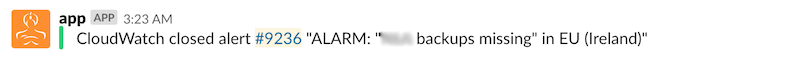

The Cloudwatch-Opsgenie integration provides a really convenient feature: Opsgenie can create and close alerts automatically based on the corresponding alarm state transitions in Cloudwatch. So once the backups recover, Opsgenie resolves the alert.

In Closing

If you have any services running on AWS that have a heartbeat, you can implement this approach quite easily too. If you have implemented similar solutions to monitor your system’s heartbeat, or have found better ways to accomplish the same, we would love to hear about them in the comments below.
